Should AI police the Internet? A father photographed his child's lesion and was flagged for pornography

Author: Shell net  Time: 2022.08.26

Google is in big trouble.

In February 2021, on a doctor's instruction, an American man named Mark used his Android phone to take a few photos of his son's swollen, painful genitals and sent them to a nurse so that she could assess the condition. The subsequent diagnosis and treatment went smoothly, and Mark's son soon recovered.

But trouble found Mark. After the photos were automatically synced to Google Photos, Google's AI tools flagged them as "sexual abuse content". A child protection organization soon got involved, began investigating the incident, and further determined that the photos were suspected "child pornography".

The case was referred to the local police. A week later, the police knocked on Mark's door. At the same time, Google banned Mark's account entirely.

The AI that makes mistakes

The "culprit" behind this mix-up was Google's image recognition AI.

Using AI to prevent and combat crimes against children is nothing new. As early as 2009, Microsoft developed a tool called PhotoDNA to help trace and block the spread of child pornography.

The core principle of PhotoDNA is a technique called "robust hashing". Normally, running any computer file through a hash algorithm yields a single unique "hash value", often described as a "data fingerprint", and changing the file even slightly changes that value completely. The algorithm PhotoDNA uses instead produces a broader, more tolerant hash result.

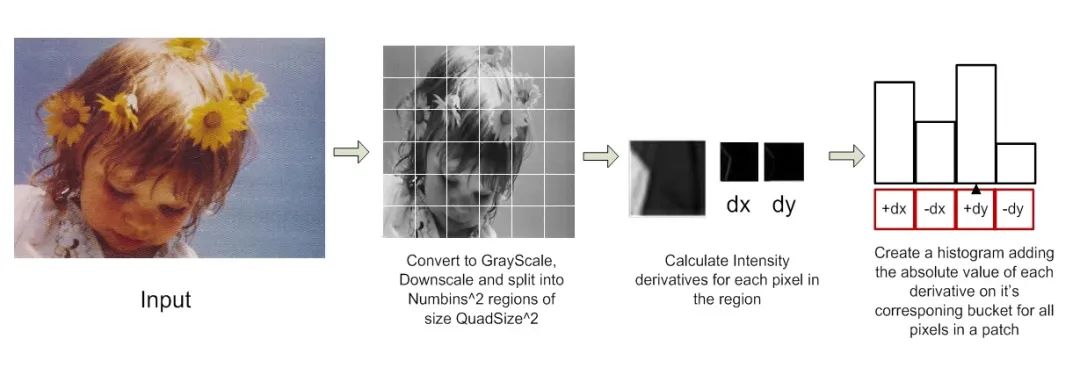

A simplified view of how robust hashing works on photos 丨 Image source: TechCrunch

At the application level, PhotoDNA computes a robust hash from a known child pornography image. Even if that image is later cropped or edited, it retains some characteristics of the original, and its hash can still be matched to the original's. A more intuitive analogy: it is like matching "half" a fingerprint against the full print and tracing it back to its owner. In this way, technology companies can trace and block the spread of such content.
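The contrast between an ordinary hash and a robust hash can be sketched in a few lines of Python. This is only an illustration: PhotoDNA's actual algorithm is not described in this article, so the sketch swaps in a simple "average hash" as a stand-in. The function names, the 8x8 sample images, and the 4x4 grid are all assumptions made for the example. It also only demonstrates tolerance to a brightness edit, not to cropping.

```python
import hashlib

def average_hash(pixels, grid=4):
    """Toy robust hash (NOT PhotoDNA): one bit per grid cell,
    1 if the cell is brighter than the mean of all cells."""
    h, w = len(pixels), len(pixels[0])
    cells = []
    for gy in range(grid):
        for gx in range(grid):
            ys = range(gy * h // grid, (gy + 1) * h // grid)
            xs = range(gx * w // grid, (gx + 1) * w // grid)
            vals = [pixels[y][x] for y in ys for x in xs]
            cells.append(sum(vals) / len(vals))
    mean = sum(cells) / len(cells)
    return [1 if c > mean else 0 for c in cells]

def hamming(a, b):
    """Number of bit positions where two hashes differ."""
    return sum(x != y for x, y in zip(a, b))

def sha256_of(pixels):
    """Ordinary cryptographic hash over the raw pixel bytes."""
    return hashlib.sha256(bytes(v for row in pixels for v in row)).hexdigest()

# Hypothetical 8x8 grayscale images (values 0..255).
original = [[200] * 4 + [50] * 4 for _ in range(8)]             # bright left half
edited = [[min(255, v + 10) for v in row] for row in original]  # slightly brightened copy
unrelated = [[200] * 8 if y < 4 else [50] * 8 for y in range(8)]  # bright top half

h_orig = average_hash(original)
h_edit = average_hash(edited)
h_other = average_hash(unrelated)

print(hamming(h_orig, h_edit))    # 0: the edited copy still matches
print(hamming(h_orig, h_other))   # 8: the unrelated image is far away
print(sha256_of(original) == sha256_of(edited))  # False: ordinary hash breaks
```

In a matching system built this way, an upload would be treated as a hit when its distance to a known hash falls under some threshold. The threshold is a tuning choice that trades missed matches against false alarms.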

Building on PhotoDNA, Google's AI tools go a step further. They use known child sexual abuse content as training samples for deep learning and learn generalized features such as nudity, specific organs, and poses. Google's AI can then directly identify "images of child pornography and abuse", even when an image is being uploaded to the Internet for the first time.

In 2018, Google launched this AI tool and made it available to a range of companies, including Facebook. The technology was regarded as a major breakthrough and became a weapon in the crackdown on crimes against children.

The United States cracks down hard on crimes against children. Federal law requires entities to report leads on child sex crimes. Accordingly, after launching its algorithm, Google immediately set up a "reporting mechanism" to pass leads on such content to child protection agencies.

The work of child protection agencies has also seen a breakthrough. Previously, most leads on crimes against children came from "telephone reports". Because such crimes often take place in private settings and within families, investigating them and collecting evidence was very difficult.

With AI tools, however, everything from investigation to evidence collection has become much easier. According to the National Center for Missing & Exploited Children (NCMEC), 4,260 potential child victims were found in 2021 through AI identification and reported leads.

However, no matter how advanced it is, AI will make mistakes, whether through a technical bug or a factual misjudgment.

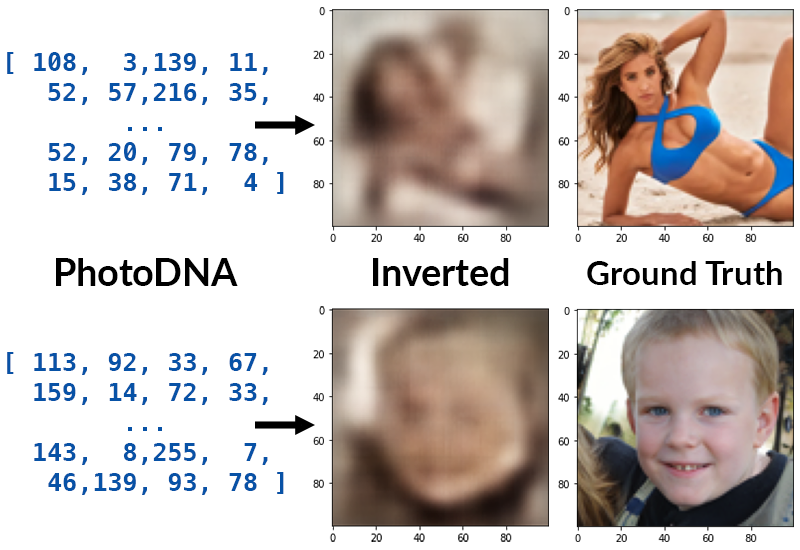

In response to such robust hashing algorithms, many technicians are trying to reverse-engineer their blind spots. For example, a reverse algorithm can generate an image that resembles the original but is different in nature. Once fed in, the AI recognizes it as having the same hash result as the original image. This is a "bug" in the AI.

A technician at MIT proposed a reverse method named Ribosome, which treats PhotoDNA as a black box and uses machine learning to attack the hash algorithm 丨 Image source: Internet
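The idea of fooling a robust hash can be shown with a toy example. To be clear about its limits: this is not Ribosome and not an attack on PhotoDNA. It uses a simple "average hash" as a stand-in, and the attacker here knows the algorithm in full, whereas the method described above treats the hash as a black box. The names `average_hash` and `forge_from_hash`, and the sample gradient image, are assumptions made for the example.

```python
def average_hash(pixels, grid=4):
    """Toy robust hash (NOT PhotoDNA): one bit per grid cell,
    1 if the cell is brighter than the mean of all cells."""
    h, w = len(pixels), len(pixels[0])
    cells = []
    for gy in range(grid):
        for gx in range(grid):
            ys = range(gy * h // grid, (gy + 1) * h // grid)
            xs = range(gx * w // grid, (gx + 1) * w // grid)
            vals = [pixels[y][x] for y in ys for x in xs]
            cells.append(sum(vals) / len(vals))
    mean = sum(cells) / len(cells)
    return [1 if c > mean else 0 for c in cells]

def forge_from_hash(bits, grid=4, cell=2):
    """Build a black-and-white block image directly from a target hash:
    a white cell for each 1 bit, a black cell for each 0 bit."""
    size = grid * cell
    return [[255 if bits[(y // cell) * grid + (x // cell)] else 0
             for x in range(size)]
            for y in range(size)]

# Hypothetical "original": a smooth 8x8 grayscale gradient.
target = [[x * 30 + y * 5 for x in range(8)] for y in range(8)]

# The attacker only needs the target's hash, not the image itself.
forged = forge_from_hash(average_hash(target))

print(forged != target)                                # True: different pixels
print(average_hash(forged) == average_hash(target))    # True: same hash
```

The forged image carries none of the original's detail, yet a matcher that compares only hashes would treat the two as the same picture. Real robust hashes are much harder to invert than this toy, which is why the attack in the caption relies on machine learning.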

A more likely problem is misjudgment at the factual level. In Mark's case, the AI's recognition was not wrong (the photos Mark took were indeed close-ups of his son's genitals), but because the AI had no understanding of the context, the mix-up occurred.

Many argue that occasional mix-ups do not negate the positive role of AI tools in cracking down on crimes against children. Still, technology companies, child protection organizations, and law enforcement agencies need to establish a more flexible system to help innocent people like Mark.

How to correct it?

Mark was quickly cleared of suspicion. The case was not complicated: he gave the police the record of his communication with the doctor and nurse that night, proving that "the photos were taken for the child's medical treatment", and the police quickly dropped the investigation.

But when Mark submitted the relevant documents in the hope of restoring his Google account, he was rejected. After the AI flagged the photos as "child pornography", Google investigated the account further. In the process, it found content showing Mark's son and wife lying naked in bed, which was also judged to be "suspected child abuse".

Cracking down on child abuse and trafficking 丨 Image source: NCMEC

Almost no one can accept having private family content like this put on a human reviewer's screen and examined. Manual review is also inefficient and costly, and it raises complex privacy issues. Technology companies would rather hand "review" over to AI.

Many technology companies face a dilemma over AI.

In 2019, The Guardian reported that under Siri's "improvement program", Apple had hired data annotators from a third-party company to listen to the raw audio of users' voice commands. The work was originally aimed at commands that Siri could not recognize: after a human listened to them, the "samples" in the speech recognition database were supplemented and corrected. However, these "commands" included many recordings made after users had triggered Siri, and they involved a great deal of private information, such as business transactions, medical conversations, and even crimes and sexual activity. After the story broke, Apple quickly halted the project.

Last fall, Apple was also preparing to deploy a hash-matching algorithm similar to PhotoDNA for iCloud Photos. However, because of opposition from privacy groups, the plan was put on hold.

In Mark's case, had a "person" been listening, it would not have been hard to see that he was innocent, which is why the police quickly cleared him. But for technology companies, having "people" get involved in such matters is often not only costly and inefficient but also raises questions of morality, privacy, and ethics.

In the complex real world, people need AI's help, and AI needs people to correct its errors. But how the two should cooperate is still an open problem.

Be responsible for each other

For Mark, the account remains blocked.

He used to be a loyal user of the full suite of Google services. After the ban, his email and his Google Fi phone number stopped working, leaving him almost "cut off" from the outside world. Years of emails, photos, and calendar data could not be recovered.

Mark considered suing Google, but after consulting a lawyer he found that court and legal fees would come to about $7,000, with no guarantee of winning. After thinking it over, he gave up the plan.

Mark's experience is not an isolated case. While reporting on Mark, The New York Times also found another "victim", Cassio, whose experience was almost identical: he too was banned because of photos of his son's private parts.

Among the hundreds of thousands of related reports each year, hundreds or even thousands of similar "false positives" may exist. AI can identify a child's private parts, but it struggles to tell whether an image is a "prelude to sexual assault" or "parents giving their child a bath".

Google cannot determine what parents are "doing" to their children 丨 Image source: Unsplash

Compared with restoring an account, re-examining this content from the perspective of "public ethics" is much harder for Google. So it simply hands the problem to AI, and the AI applies a "zero tolerance" policy to images of exposed children. Even after the incident was reported by the media, Google stood by its approach: it would not unblock the accounts of the two innocent fathers in the report.

In both academic and technical circles, those evaluating this incident almost unanimously affirm the role of AI tools in cracking down on crimes against children. At the same time, they believe AI needs an error-correction mechanism.

This problem is by no means unsolvable. The key is for all parties (especially large companies) to take responsibility proactively rather than rely excessively on technical tools.

People and AI have to be responsible for each other.

Author: jesse

Edit: Shen Zhihan
