When driver assistance fails and causes a crash, the owner is held responsible. Do you still dare to use it?

Author: Shell Net  Time: 2022.08.14

Another crash has occurred with driver assistance engaged.

On the afternoon of August 10, a Xiaopeng P7 electric car traveling at about 80 km/h on an elevated road in Ningbo struck a broken-down vehicle temporarily stopped at the roadside. Road surveillance and dashcam footage show that the P7 barely slowed or steered as it approached the stopped vehicle and drove straight into it. The rear of the stopped vehicle was badly damaged, and a person standing behind it was killed.

Mr. Wang, the owner of the car involved, told Red Star News that at the time of the crash he was using the car's LCC (Lane Centering Control) function, but the system failed to recognize the person and vehicle ahead and gave no warning before the impact. He admitted that his attention had drifted and he did not react while driver assistance was engaged, which ultimately led to the crash.

On August 11, Xiaopeng Motors responded officially, saying that the traffic police had opened a case and that Xiaopeng would fully cooperate with the relevant authorities in the investigation and follow up on the results.

This tragedy is not unprecedented; a similar incident has happened before.

On March 13 this year, Xiaopeng P7 owner Mr. Deng had a serious crash on an expressway section in Yueyang, Hunan. LCC was also engaged at the time of that crash. Mr. Deng wrote on social media: "Before the impact, the vehicle did not slow down, gave no forward-collision warning, and showed no sign of emergency braking ... I think the driver-assistance function has a fatal safety flaw."

With crashes like these recurring, can owners still entrust their lives to driver-assistance systems?

Can LCC free the driver?

LCC, the function at the center of these disputes, helps keep the vehicle centered in its lane. On roads with clear lane markings, if the vehicle drifts away from the lane center, the system automatically applies steering assistance to bring it back and help keep driving safe. When the driver starts a lane change, the system automatically disengages so that it does not interfere with the driver's own input.
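The centering-and-exit behavior described above can be sketched as a single control step. This is a minimal illustration under assumed conventions, not Xiaopeng's implementation: the names `LaneState`, `DriverInput` and `lcc_step`, the sign convention for lateral offset, the proportional gain, the correction cap and the driver-torque threshold are all hypothetical, and real lane-keeping controllers are far more elaborate.

```python
from dataclasses import dataclass

@dataclass
class LaneState:
    lateral_offset_m: float   # + means left of lane center (assumed convention)
    lane_lines_visible: bool  # whether the camera currently sees clear markings

@dataclass
class DriverInput:
    turn_signal_on: bool
    steering_torque_nm: float  # torque the driver applies to the wheel

# Hypothetical tuning values, chosen only for illustration.
GAIN_RAD_PER_M = 0.05      # steering correction per meter of offset
MAX_CORRECTION_RAD = 0.1   # cap so the assist stays gentle
DRIVER_OVERRIDE_NM = 2.0   # torque above which the driver is treated as steering

def lcc_step(lane: LaneState, driver: DriverInput) -> tuple[bool, float]:
    """Return (still_active, steering_correction_rad) for one control cycle."""
    # Disengage when the driver starts a lane change or clearly steers,
    # so the assist never fights the driver's own input.
    if driver.turn_signal_on or abs(driver.steering_torque_nm) > DRIVER_OVERRIDE_NM:
        return False, 0.0
    # Without clear lane lines there is no center to steer toward.
    if not lane.lane_lines_visible:
        return False, 0.0
    # Proportional correction back toward the center (opposite sign to offset).
    correction = -GAIN_RAD_PER_M * lane.lateral_offset_m
    correction = max(-MAX_CORRECTION_RAD, min(MAX_CORRECTION_RAD, correction))
    return True, correction

print(lcc_step(LaneState(0.4, True), DriverInput(False, 0.0)))
print(lcc_step(LaneState(0.4, True), DriverInput(True, 0.0)))
```

The driver-override check runs first, so a lane change or firm steering input ends assistance before any correction is computed.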

When LCC is on, the exterior cameras detect the lane lines so that the vehicle stays centered in its lane. At the same time, the cameras and millimeter-wave radar judge the distance to the vehicle ahead and automatically maintain a safe following distance.
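Keeping a safe distance is commonly framed as holding a constant time gap to the vehicle ahead. The sketch below assumes that framing; the function names, the 1.8 s time gap, the 5 m standstill margin, the gains and the acceleration limits are hypothetical values chosen for illustration, not figures from Xiaopeng.

```python
def desired_gap_m(ego_speed_mps: float, time_gap_s: float = 1.8,
                  standstill_m: float = 5.0) -> float:
    """Gap to hold: a fixed standstill margin plus distance covered in time_gap_s."""
    return standstill_m + time_gap_s * ego_speed_mps

def acc_command(gap_m: float, ego_speed_mps: float, lead_speed_mps: float,
                k_gap: float = 0.2, k_speed: float = 0.6,
                a_min: float = -3.0, a_max: float = 1.5) -> float:
    """Acceleration command (m/s^2) from gap error and relative speed, clamped."""
    gap_error = gap_m - desired_gap_m(ego_speed_mps)  # negative: too close
    closing = lead_speed_mps - ego_speed_mps          # negative: catching up
    accel = k_gap * gap_error + k_speed * closing
    # Clamp to assumed comfortable braking and acceleration limits.
    return max(a_min, min(a_max, accel))

ego = 80 / 3.6  # 80 km/h in m/s
print(round(desired_gap_m(ego), 1))
print(acc_command(gap_m=60.0, ego_speed_mps=ego, lead_speed_mps=ego))
```

At 80 km/h this sketch asks for a gap of about 45 m. It can only respond to a lead vehicle that perception actually reports; if a target is dropped upstream, nothing here will brake for it.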

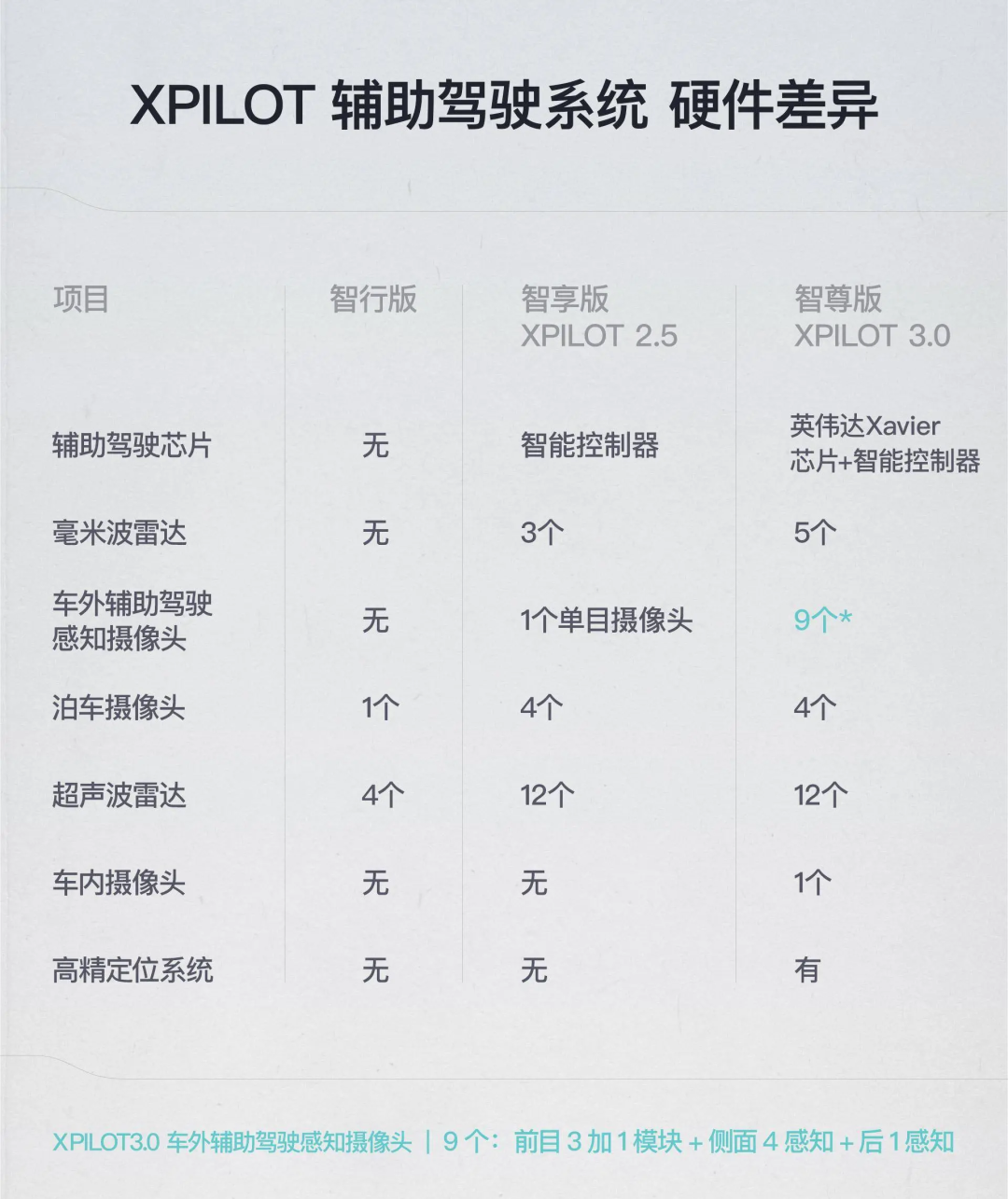

According to the official website, Xiaopeng's top-tier driver-assistance system, XPILOT 3.0, currently uses a dual setup of an NVIDIA Xavier chip plus a smart controller, 5 high-precision millimeter-wave radars, 13 exterior cameras and 1 interior camera, for a total of 14 cameras.

Hardware configuration of different XPILOT versions | Xiaopeng official website

But even the top-tier XPILOT 3.0 system has a fatal weakness.

The camera plus millimeter-wave radar combination has difficulty recognizing non-standard stationary objects, and the problem is especially pronounced at high speed. A stationary object has no motion of its own, so its echo carries no Doppler (phase) difference that would set it apart from other stationary returns; its signal easily blends with those of nearby interfering targets, and the radar cannot identify it accurately. On top of that, the "static clutter filtering" algorithm also filters out some stationary objects. As a result, the system may misjudge or ignore stopped vehicles and pedestrians ahead.
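The filtering problem can be made concrete with a toy example. The sketch assumes a simplified radar that reports only range and range rate for targets roughly straight ahead, so a target's ground speed is the ego speed plus its range rate; the class and function names and the 1 m/s threshold are hypothetical. It shows how a rule meant to discard stationary clutter also discards a stopped car.

```python
from dataclasses import dataclass

@dataclass
class RadarTarget:
    name: str
    range_m: float
    range_rate_mps: float  # rate of change of range; negative when closing

def ground_speed_mps(target: RadarTarget, ego_speed_mps: float) -> float:
    # For a target roughly straight ahead, its speed over the ground along our
    # heading is our speed plus the measured range rate. Anything stationary
    # shows range_rate ~= -ego_speed, i.e. a ground speed near zero.
    return ego_speed_mps + target.range_rate_mps

def static_clutter_filter(targets: list[RadarTarget], ego_speed_mps: float,
                          threshold_mps: float = 1.0) -> list[RadarTarget]:
    """Keep only targets that appear to move over the ground."""
    return [t for t in targets
            if abs(ground_speed_mps(t, ego_speed_mps)) >= threshold_mps]

ego = 22.2  # about 80 km/h
targets = [
    RadarTarget("moving car", 60.0, -2.0),       # traveling at ~20 m/s
    RadarTarget("overhead sign", 120.0, -22.2),  # stationary clutter
    RadarTarget("stopped car", 80.0, -22.2),     # stationary hazard
]
kept = static_clutter_filter(targets, ego)
print([t.name for t in kept])
```

The stopped car and the overhead sign both have a ground speed of zero and are removed together, while the moving car survives. Real systems add angle, camera data and tracking history to rescue such targets, which this sketch omits.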

Schematic of how millimeter-wave radar works

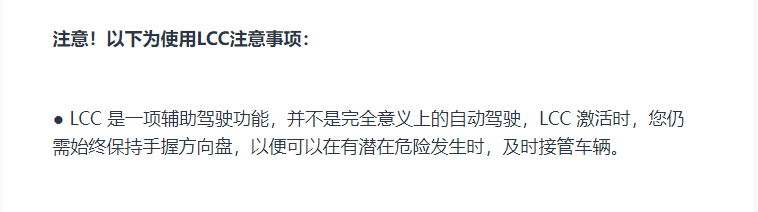

In its various introductions to LCC, Xiaopeng states that "when Lane Centering Control (LCC) is active, the driver still needs to keep their hands on the steering wheel and take over if necessary" and that "once LCC is activated, you must always keep your hands on the steering wheel so that you can take over the vehicle in time when there is a potential danger."

Article introducing LCC in XPILOT | Xiaopeng Community

When driver assistance fails, the owner is responsible

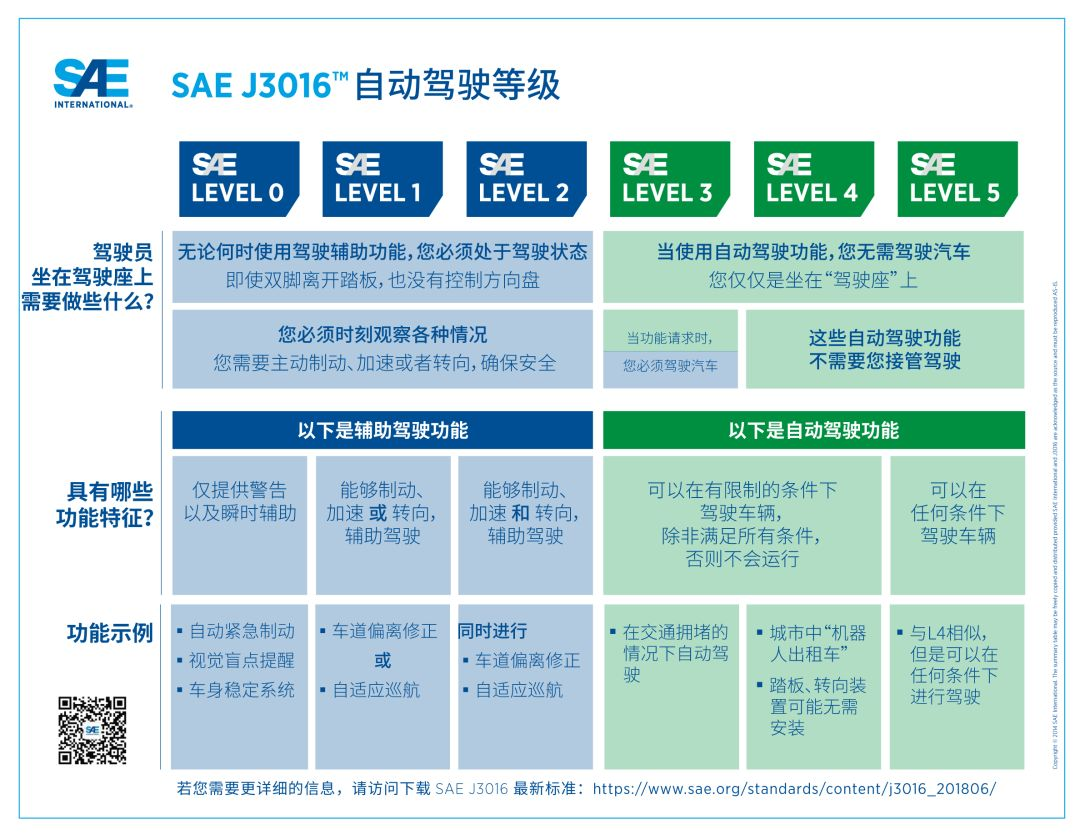

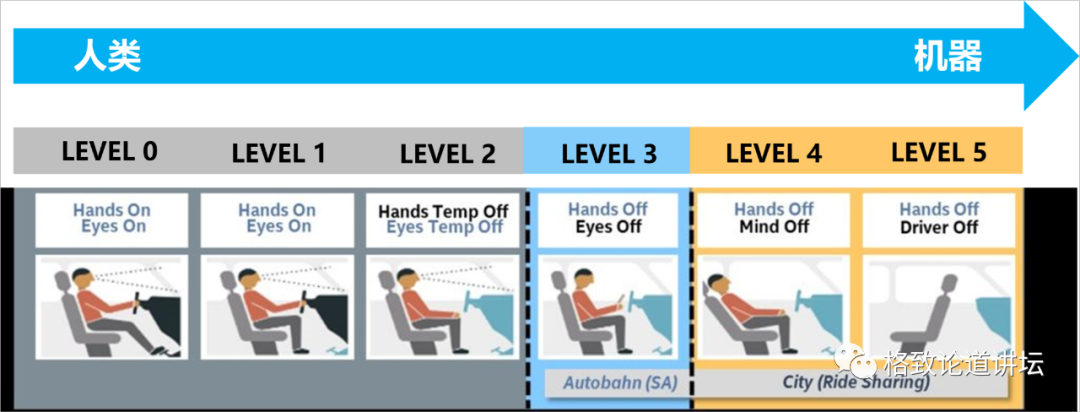

Under the driving automation levels defined by SAE International (the Society of Automotive Engineers) and adopted by the US National Highway Traffic Safety Administration (NHTSA), driving automation is divided into six levels, L0 to L5. L0 to L2 are driver assistance, in which the driver must supervise at all times; at L3, the system drives but the driver must take over when the system requests it; L4 and L5 are autonomous driving that does not require the driver to take over.
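The responsibility split described above can be written down as a small lookup. This is a sketch: the enum and function names are hypothetical, the level names in the comments follow SAE J3016, and the return strings paraphrase the text rather than quote the standard. L4 needs no takeover only within the conditions the system is designed for.

```python
from enum import IntEnum

class SAELevel(IntEnum):
    L0 = 0  # No Driving Automation
    L1 = 1  # Driver Assistance
    L2 = 2  # Partial Driving Automation (e.g. lane centering plus adaptive cruise)
    L3 = 3  # Conditional Driving Automation
    L4 = 4  # High Driving Automation
    L5 = 5  # Full Driving Automation

def who_is_driving(level: SAELevel) -> str:
    """Paraphrase of who must supervise the road at each level."""
    if level <= SAELevel.L2:
        # Driver assistance: the human drives and must watch the road at all times.
        return "driver, at all times"
    if level == SAELevel.L3:
        # The system drives, but the human must take over when it requests.
        return "system, driver takes over on request"
    # L4 (within its designed operating conditions) and L5: no takeover needed.
    return "system, no takeover needed"

for level in SAELevel:
    print(level.name, "->", who_is_driving(level))
```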

SAE levels of driving automation | SAE

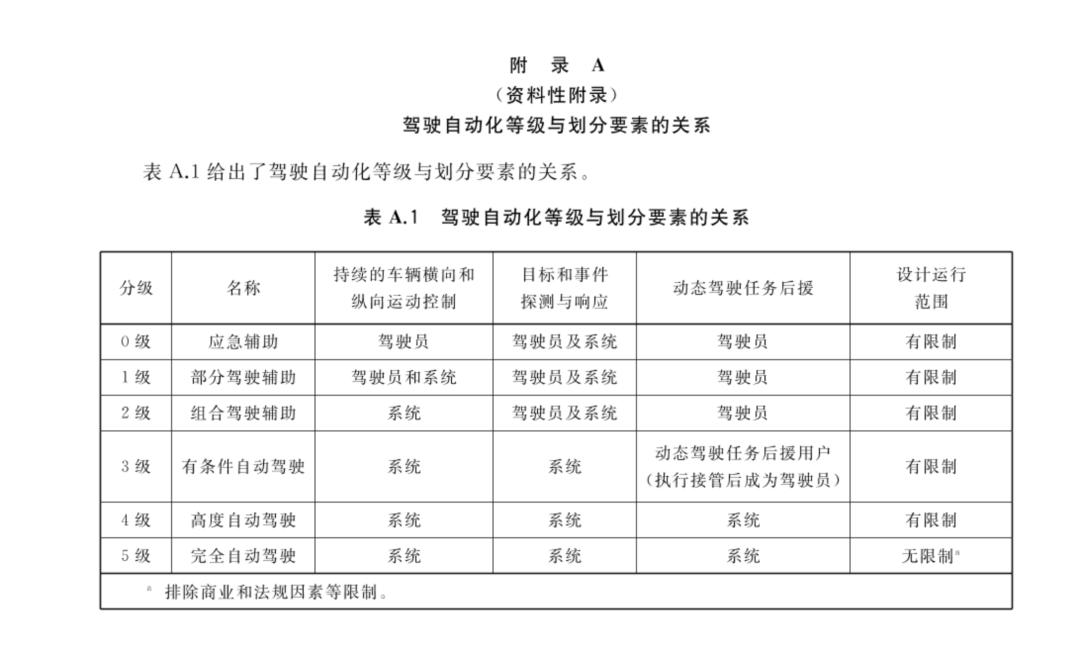

In March this year, China's State Administration for Market Regulation and the Standardization Administration of China also introduced a recommended national standard, "Taxonomy of Driving Automation for Vehicles," for use in China.

"Taxonomy of Driving Automation for Vehicles" | Standardization Administration of China

Carmakers hold that when an accident occurs in driver-assistance mode, the owner is responsible.

Xiaopeng repeatedly stresses in its user manual that owners must not rely on the ACC/LCC systems and must be ready to intervene manually at any time, and it lists scenarios in which the systems may fail, including being unable to detect stationary vehicles and objects. So even if the driver-assistance system fails when an accident occurs, the owner still bears primary responsibility, because the manual has stressed that the driver's hands must never leave the steering wheel and that the driver must be ready to take over at any time.

Xiaopeng User Manual | xiaopeng.com

From a legal perspective, too, domestic traffic-accident liability determinations treat the driver as the party responsible for a car using driver assistance.

On August 1, the "Regulations on the Administration of Intelligent Connected Vehicles in the Shenzhen Special Economic Zone" (the "Regulations") took effect. They divide autonomous driving into three types, conditional autonomous driving, highly autonomous driving and fully autonomous driving, and are regarded as China's first L3-level regulations.

The "Regulations" stipulate that traffic violations committed by an intelligent connected vehicle with a driver are to be handled against the driver by the traffic management department of the public security organs in accordance with the law. If such a vehicle causes damage in a traffic accident and its side is at fault, the driver bears the corresponding liability for compensation; if the accident is caused by a quality defect in the vehicle, the driver, after bearing liability for damages in accordance with the law, may seek recovery from the vehicle's producer and seller. In other words, under current law, although the driver is liable for a traffic accident that occurs while the driver-assistance function is in use, the driver can recover compensation from the carmaker when a defect is to blame.

Although Xiaopeng's user manual classifies the P7's driver-assistance system as L2, if you search Baidu for "Xiaopeng P7 L3" and look at results from before August 9 (the day before the accident), you will find many articles online promoting the P7 as having L3 autonomous driving. Consumers may well overestimate the car's driver-assistance capabilities and become complacent behind the wheel.

For ordinary car owners, it is hard to tell what counts as true L3 | Baidu screenshot

The same is true abroad: even when the vehicle's system fails, legal responsibility usually falls on the driver. Judging from past cases, driver assistance cannot be treated as the driver's trusted friend, and drivers must stay alert to the risk of accidents at all times.

In May 2016, 40-year-old American driver Joshua Brown was driving a Tesla Model S in Autopilot mode when the car's sensors failed to recognize a white truck against the bright sky ahead; the car crashed and he was killed. Joshua became the first person known to die in a crash with Autopilot engaged.

Photo of Joshua Brown | Facebook

In its subsequent statement on the crash, Tesla stressed that Autopilot was not perfect and that owners still had to be ready to take over at any time, making clear where responsibility lies: Autopilot "is an assist feature that requires you to keep your hands on the steering wheel at all times," and when using it, "you need to maintain control and responsibility for your vehicle."

At the end of 2019, a Tesla Model S driven by American driver Kevin George Aziz Riad left a California freeway with Autopilot engaged, ran a red light and hit another car, killing the two people inside it. In January this year, Los Angeles prosecutors charged the 27-year-old with vehicular manslaughter. The case was also the first criminal prosecution involving Tesla's Autopilot, and Tesla itself was not charged.

We are still far from true autonomous driving

From the first appearance of the autonomous-driving concept to its real-world arrival at L2, the industry has gone from loud, triumphant promotion to quietly rewording "autonomous driving" as "assisted driving." The reason is that L3 is a watershed: from that level up, carmakers would bear more liability.

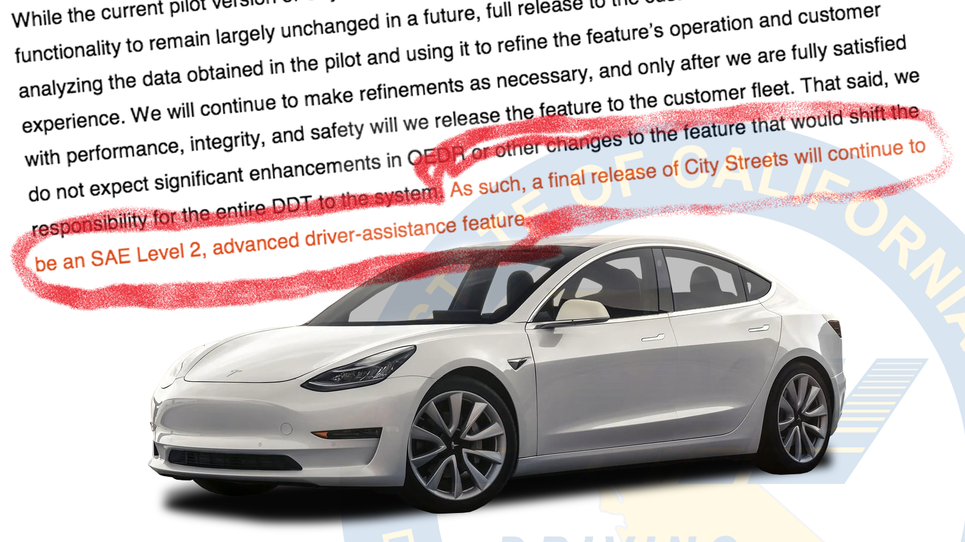

As a pioneer of autonomous driving, Tesla is the first to bear the brunt. According to a CNBC report on August 5, the California Department of Motor Vehicles (DMV) is accusing Tesla of false advertising about autonomous driving. The DMV argues that calling its driver-assistance systems "Autopilot" (literally, automatic piloting) and "FSD" (short for Full Self-Driving) implies they are fully autonomous, which is exaggerated advertising. Tesla has two weeks to respond, or it may temporarily lose its license to do business in California.

Previously disclosed emails between Tesla and the California DMV | DMV

The tug-of-war over wording also reflects our hesitation over whether to hand control to machines.

If a small branch blocked the road and no vehicles were coming, we would simply cross into the opposite lane and go around it. A self-driving car, however, might come to a complete stop, because it is bound to obey traffic laws that prohibit crossing a double yellow line. This unexpected move avoids hitting the object in front, but it may cause a collision with a human-driven car behind.

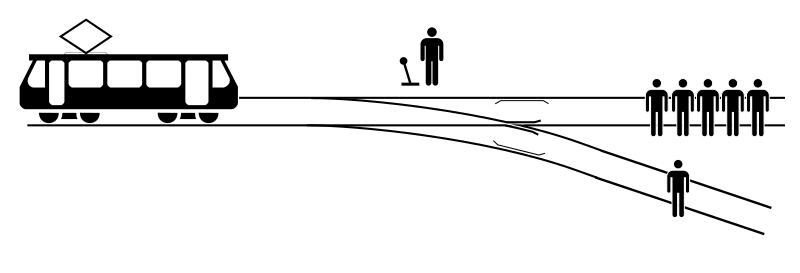

Another example is the classic trolley problem: a runaway trolley is about to run over and kill five people standing on the track. You can pull a switch to send the trolley onto another track where only one person stands, sacrificing one to save five, or you can leave the switch alone and let the five die. Even humans cannot settle ethical questions like this.

The trolley problem

In the 2004 film "I, Robot," adapted from Isaac Asimov's "I, Robot," the protagonist, Detective Spooner, played by Will Smith, deeply distrusts artificial-intelligence robots. Years earlier, in a car accident, the robot that came to the rescue judged that a 12-year-old girl had a lower chance of survival, so it abandoned her and saved Spooner instead. When lives are at stake, the test facing autonomous cars may be even harder.

"I, Robot" still

Letting a machine decide people's fate in a crash may itself be unethical. Engineers develop autonomous-driving algorithms according to their own moral norms and beliefs, and their decisions affect the lives of drivers, passengers and pedestrians. Policymakers and governments are also trying to write rules and regulations for autonomous vehicles, which further affect drivers, passengers and pedestrians.

Germany has tried to address the ethics of autonomous vehicles through guidelines. The German government states that "self-driving cars should always try to minimize deaths and must not discriminate between individuals on the basis of age, gender or any other factor. Human life should always take priority over animals or property." A study published in Science found that more than 75% of participants support this utilitarian approach. Given the technical and ethical issues, it may still be a long time before we can confidently hand all control to machines and have truly autonomous vehicles. We hope there will be fewer and fewer tragedies along the way.
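The German guideline can be read as a strict priority order: first minimize expected human deaths, and only then consider harm to animals or property, without ever looking at who the people are. The sketch below encodes that reading as a lexicographic comparison. It is a toy under strong assumptions: the `Outcome` fields, the casualty and damage estimates and the single combined "non-human harm" score are hypothetical, and real systems cannot estimate such quantities this cleanly.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Outcome:
    maneuver: str
    expected_human_deaths: float  # hypothetical estimate
    non_human_harm: float         # assumed single score for animals and property
    # No age, gender or other personal attributes: the guideline forbids
    # weighing people by such features, so the chooser cannot see them.

def choose_maneuver(outcomes: list[Outcome]) -> Outcome:
    # Tuples compare element by element, so human deaths always dominate;
    # non-human harm only breaks ties between equally deadly options.
    return min(outcomes, key=lambda o: (o.expected_human_deaths, o.non_human_harm))

options = [
    Outcome("brake in lane", 1.0, 10.0),
    Outcome("swerve into barrier", 0.0, 50.0),
    Outcome("swerve onto verge", 0.0, 20.0),
]
print(choose_maneuver(options).maneuver)
```

Because the comparison is lexicographic, no amount of property damage can outweigh a single expected human death, which is the priority the guideline states.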


Author: Shashi Billy

Editor: Turn over

- END -
