Google's AI can't understand netizens' comments, with up to 30% of its labels wrong. Netizens: "You don't get my meme"

Author: QbitAI | Date: 2022.07.18

Pine, reporting from Aofei Temple | QbitAI (WeChat Official Account: QbitAI)

Here are a few lines. Try to sense the emotions they carry:

"I really thank you."

"Listen to me say thank you; because of you, the four seasons are warm ..."

You might say this is easy: aren't these just the memes everyone has been playing with lately?

But ask your elders, and they may well pull the baffled "old man on the subway staring at his phone" face.

As it turns out, the generation gap with pop culture is not limited to the elderly. AI has one too.

A blogger recently published an article analyzing a Google dataset and found that its emotion labels for Reddit comments had an error rate as high as 30%.

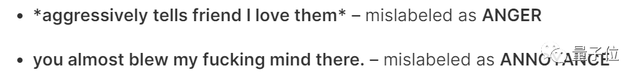

Take this one, for example:

I want to express my love for him angrily.

The Google dataset labeled it as "angry".

There is also the following comment:

You fucking almost scared me.

The Google dataset labeled it as "confusion".

Netizens cried out: "You don't get my meme!"

Artificial intelligence has turned into artificial stupidity. How did it make such outrageous mistakes?

Taking words out of context is its specialty

It all comes down to how it makes its judgments.

When the Google dataset labels a comment, it judges the text in isolation.

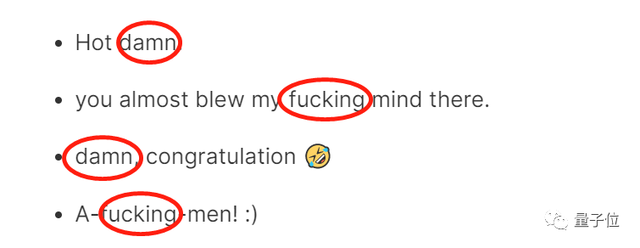

Take a look at the picture: the Google dataset wrongly labeled each of these comments as anger.

We might as well speculate on why the Google dataset got these wrong. Take the examples in the picture: all four comments contain some "swear words".

The Google dataset apparently treats these "swear words" as the basis for its judgment. But read the full comments carefully and you will find that this so-called "basis" only intensifies the tone of the sentence and carries no real meaning of its own.
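To make this speculation concrete, here is a toy Python sketch of two rule-based labelers. The word lists, function names, and labels are all hypothetical, invented for illustration; this is not how the Google dataset was actually labeled. The first rule treats any swear word as a sign of anger; the second treats swear words as mere intensifiers and looks only at the remaining words.

```python
import re
from typing import List

# Hypothetical word lists for illustration only; not taken from any real dataset.
PROFANITY = {"fucking", "fuckin", "damn"}
POSITIVE_CUES = {"love", "amazing", "awesome", "great"}
NEGATIVE_CUES = {"hate", "furious", "angry"}


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def naive_label(text: str) -> str:
    """Surface-cue rule: any swear word is taken as evidence of anger."""
    if any(token in PROFANITY for token in tokenize(text)):
        return "anger"
    return "neutral"


def intensifier_aware_label(text: str) -> str:
    """Treat swear words as intensifiers: drop them, then check the remaining cues."""
    tokens = [t for t in tokenize(text) if t not in PROFANITY]
    if any(t in POSITIVE_CUES for t in tokens):
        return "positive"
    if any(t in NEGATIVE_CUES for t in tokens):
        return "anger"
    return "neutral"


if __name__ == "__main__":
    for comment in ["You guys are fucking amazing", "His traps hide the fucking sun."]:
        print(comment, "->", naive_label(comment), "/", intensifier_aware_label(comment))
```

The second rule avoids the false "anger" label, but it still returns "neutral" for the muscle comment: word lists alone cannot tell that it is praise, which is where context comes in.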

Comments posted by netizens rarely exist in isolation. Factors such as the post being replied to and the platform where the comment appears can change the meaning of the whole sentence.

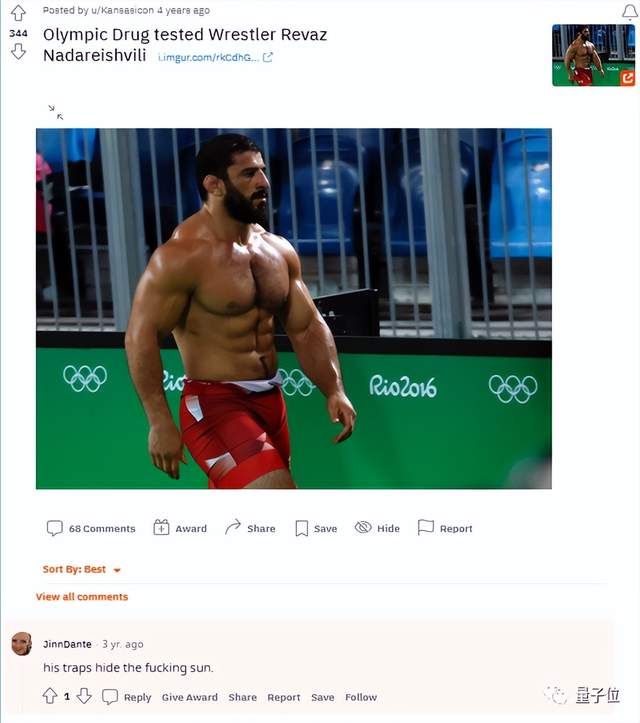

Take this comment, for example:

His traps hide the fucking sun.

On its own, it is hard to tell what emotion it expresses. But if you know it was posted on a bodybuilding website, the meaning is easy to guess: the commenter just wants to praise the man's muscles.

Ignoring the post a comment replies to, or judging the comment's emotion from a single emotionally charged word, is unreasonable.

A sentence never exists in isolation. It has a specific context, and its meaning changes as that context changes.

Judging a comment's emotional tone within its full context may greatly improve accuracy.
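One way to act on this is to show annotators the context together with the comment. Below is a minimal Python sketch, assuming a hypothetical `AnnotationItem` record with optional `community` and `parent_post` fields; the field names, the function name, and the sample post are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnnotationItem:
    """A comment to be labeled, plus optional context (hypothetical schema)."""
    comment: str
    community: Optional[str] = None    # where the comment was posted, e.g. a forum name
    parent_post: Optional[str] = None  # the post the comment replies to


def render_for_annotator(item: AnnotationItem) -> str:
    """Build the text shown to a human rater, putting context before the comment."""
    lines = []
    if item.community:
        lines.append(f"Community: {item.community}")
    if item.parent_post:
        lines.append(f"Replying to: {item.parent_post}")
    lines.append(f"Comment: {item.comment}")
    return "\n".join(lines)


if __name__ == "__main__":
    item = AnnotationItem(
        comment="His traps hide the fucking sun.",
        community="a bodybuilding forum",                        # hypothetical
        parent_post="Photo of my back after a year of training",  # hypothetical
    )
    print(render_for_annotator(item))
```

A rater who reads "Community: a bodybuilding forum" before the comment has a much better chance of reading it as praise rather than anger.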

However, an error rate as high as 30% is not just a matter of taking words out of context; there are deeper reasons.

"AI doesn't get our memes"

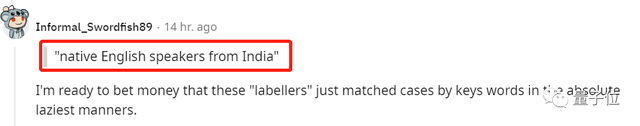

Besides context, which trips up the dataset, cultural background is another very important factor.

From whole countries and regions down to individual website communities, every group has its own in-group cultural symbols that outsiders find hard to interpret. This raises a tricky problem:

To judge the emotions in a community's comments more accurately, you need targeted training on that community's data so that you understand its cultural DNA.

On Reddit, one netizen commented that "all the raters are native English speakers from India."

This can lead to misreadings of common expressions, interjections, and community-specific memes.

With all that said, it is clear why the dataset's error rate is so high.

At the same time, this points to a clear direction for improving how accurately AI judges emotions.

The blogger also offered several suggestions in the article:

First, annotators labeling comments must deeply understand the comments' cultural background. Taking Reddit as an example, judging the emotional tone of its comments requires a thorough understanding of American culture and politics, plus the ability to quickly catch the site's own in-jokes;

Second, check whether the labels are correct for sarcasm, idioms, and memes, so that the model can fully understand the meaning of the text;

Finally, compare the model's judgments against our own and feed the discrepancies back to train the model better.
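The last suggestion, comparing model output with human judgment, can be sketched as a simple disagreement report. This Python sketch assumes the human and model labels are parallel lists of strings; the function names are hypothetical, and in practice the flagged items would go back to annotators for review.

```python
from collections import Counter
from typing import List


def flag_disagreements(human: List[str], model: List[str]) -> List[int]:
    """Return the indices of items where the model and the human label differ."""
    if len(human) != len(model):
        raise ValueError("label lists must have the same length")
    return [i for i, (h, m) in enumerate(zip(human, model)) if h != m]


def confusion_pairs(human: List[str], model: List[str]) -> Counter:
    """Count (human_label, model_label) pairs, for the disagreements only."""
    return Counter((human[i], model[i]) for i in flag_disagreements(human, model))


if __name__ == "__main__":
    human = ["admiration", "anger", "joy", "surprise"]
    model = ["anger", "anger", "joy", "confusion"]
    print(flag_disagreements(human, model))  # -> [0, 3]
    print(confusion_pairs(human, model).most_common())
```

Counting the confused label pairs shows which categories (for example, admiration read as anger) most need clearer annotation guidelines.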

One more thing

AI luminary Andrew Ng has launched a data-centric AI movement.

It shifts the focus of AI practitioners from developing models and algorithms to the quality of the data used to train those models. As Ng once put it:

Data is food for AI.

The quality of training data is just as vital to a model. In the emerging data-centric approach to AI, data consistency is paramount: to get correct results, you hold the model or code fixed and iteratively improve the quality of the data.
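Label consistency can be measured. One standard statistic (our choice for illustration; the article does not name a metric) is Cohen's kappa, which compares how often two annotators agree with how often they would agree by chance: kappa = (p_o - p_e) / (1 - p_e), where p_o is the observed agreement and p_e the agreement expected from each annotator's label frequencies. A minimal Python sketch, assuming two annotators labeled the same items:

```python
from collections import Counter
from typing import Sequence


def cohens_kappa(rater_a: Sequence[str], rater_b: Sequence[str]) -> float:
    """Cohen's kappa for two raters who labeled the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two non-empty label sequences of equal length")
    n = len(rater_a)
    # Observed agreement: fraction of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1.0:
        # Both raters used the same single label for every item: total agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    a = ["anger", "joy", "joy", "neutral"]
    b = ["anger", "joy", "neutral", "neutral"]
    print(round(cohens_kappa(a, b), 3))  # 0.636
```

Values near 1 mean annotators agree far more often than chance would predict; low values flag a label set that needs clearer guidelines before any model is retrained on it.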


Finally, what other ways do you think could improve how language AI judges emotions?

Feel free to share your thoughts in the comments ~

- END -
